Distributed computing environment

Finding the optimal neural network configuration and training the network require considerable computing resources in practice. As a result, a single search iteration on modern computers can take hundreds of hours. An alternative is to train the neural network in a distributed computing environment: a local area network of several PCs connected via a communication channel.

The homogeneous computer network has a common-bus topology. Efficiency analysis showed that the optimal number of PCs, i.e. the number that gives the highest performance, is determined by the number of training feature vectors, the performance of each PC, and the characteristics of the communication channel.
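The source does not state the cost model behind this analysis. As a minimal sketch, assume that the training vectors are split evenly across the PCs and that, because all machines share one bus, their per-iteration messages are sent one after another. The time of one iteration is then roughly T(N) = M·t_v / N + N·(t_0 + S/B), where N is the number of PCs, M the number of training vectors, t_v the time one PC needs per vector, t_0 the per-message latency, S the message size and B the bus rate. These symbols and the function names below are hypothetical.

```python
"""Hypothetical cost model for choosing the number of PCs on a shared bus."""


def iteration_time(n_pcs: int, n_vectors: int, t_vector: float,
                   msg_bytes: float, bus_rate: float, latency: float) -> float:
    """Estimated time of one training iteration on n_pcs machines.

    Assumptions (not from the source):
    - the vectors are split evenly, so each PC processes n_vectors / n_pcs
      vectors at t_vector seconds each (this is where PC speed enters);
    - after each iteration every PC sends msg_bytes over the shared bus,
      and the transfers are serialized because the bus is shared.
    """
    compute = (n_vectors / n_pcs) * t_vector
    communication = n_pcs * (latency + msg_bytes / bus_rate)
    return compute + communication


def optimal_pc_count(max_pcs: int, **params: float) -> int:
    """Return the PC count in 1..max_pcs with the smallest estimated time."""
    return min(range(1, max_pcs + 1),
               key=lambda n: iteration_time(n, **params))


if __name__ == "__main__":
    # 10,000 vectors at 10 ms each, 1 MB per PC per iteration, 10 Mbit/s bus.
    print(optimal_pc_count(20, n_vectors=10_000, t_vector=0.01,
                           msg_bytes=1e6, bus_rate=1.25e6, latency=0.001))
```

Minimizing T(N) analytically gives N* ≈ sqrt(M·t_v / (t_0 + S/B)), so under this model the optimum grows with the number of training vectors and with per-vector cost, and shrinks as the channel gets slower, which matches the qualitative dependence stated above. With the example numbers the model favors 11 PCs.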

The distributed computing environment was built on Ethernet technology. The neural network training algorithm runs on 1 to 5 nodes of the local network, while statistical modeling runs in parallel on other nodes. Experiments showed that the run time of one iteration is reduced several-fold.
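The source does not specify how the training algorithm is divided among the training nodes. One common scheme, shown here only as an assumed illustration, is data parallelism: each node computes the error gradient over its own share of the training vectors, and a coordinator sums the partial gradients before updating the weights. To stay self-contained, the sketch uses a single linear neuron with squared error as a stand-in for the real network, and threads as stand-ins for PCs, so it shows the decomposition rather than an actual speedup. The names `partial_gradient`, `distributed_gradient` and `train` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence, Tuple

Sample = Tuple[List[float], float]  # (feature vector, target value)


def partial_gradient(weights: Sequence[float],
                     shard: Sequence[Sample]) -> List[float]:
    """Gradient of 0.5 * sum((w.x - y)^2) over one node's share of vectors."""
    grad = [0.0] * len(weights)
    for x, y in shard:
        err = sum(w * xi for w, xi in zip(weights, x)) - y
        for i, xi in enumerate(x):
            grad[i] += err * xi
    return grad


def split(data: Sequence[Sample], n_nodes: int) -> List[List[Sample]]:
    """Deal the training vectors round-robin into n_nodes shards."""
    return [list(data[k::n_nodes]) for k in range(n_nodes)]


def distributed_gradient(weights: Sequence[float], data: Sequence[Sample],
                         n_nodes: int) -> List[float]:
    """Each simulated node (a thread) handles one shard; results are summed."""
    shards = split(data, n_nodes)
    with ThreadPoolExecutor(max_workers=n_nodes) as pool:
        partials = list(pool.map(lambda s: partial_gradient(weights, s),
                                 shards))
    # Reduce step on the coordinator: sum of partial gradients.
    return [sum(g[i] for g in partials) for i in range(len(weights))]


def train(data: Sequence[Sample], n_features: int, n_nodes: int,
          lr: float = 0.01, epochs: int = 100) -> List[float]:
    """Full-batch gradient descent using the distributed gradient."""
    w = [0.0] * n_features
    for _ in range(epochs):
        g = distributed_gradient(w, data, n_nodes)
        w = [wi - lr * gi for wi, gi in zip(w, g)]
    return w
```

Because the summed partial gradients equal the full-batch gradient, the trained weights do not depend on the number of nodes; only the time per iteration does.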

A distributed computing environment is indispensable when statistical modeling of the recognition process is used to determine the parameters of the recognition state.
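Statistical modeling of this kind parallelizes naturally, since independent trials can be divided among nodes and only the error counts need to be sent back. The sketch below uses an assumed toy model, not the authors' recognition model: a signal of amplitude 2 is present or absent with equal probability, Gaussian noise with σ = 1 is added, and the recognizer decides "present" when the observation exceeds a threshold of 1. Each simulated node (a thread) uses its own seed, and the coordinator divides the total error count by the number of trials. All function names and model parameters are hypothetical.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def node_trials(seed: int, n_trials: int, threshold: float, signal: float,
                noise_sigma: float) -> int:
    """Run n_trials of a toy recognition experiment; return the error count."""
    rng = random.Random(seed)  # separate generator with a distinct seed per node
    errors = 0
    for _ in range(n_trials):
        present = rng.random() < 0.5
        observation = (signal if present else 0.0) + rng.gauss(0.0, noise_sigma)
        decided_present = observation > threshold
        if decided_present != present:
            errors += 1
    return errors


def error_probability(total_trials: int, n_nodes: int, base_seed: int = 0,
                      **model: float) -> float:
    """Split the trials across n_nodes simulated nodes and pool the counts."""
    per_node = [total_trials // n_nodes] * n_nodes
    for k in range(total_trials % n_nodes):
        per_node[k] += 1  # spread the remainder over the first nodes
    with ThreadPoolExecutor(max_workers=n_nodes) as pool:
        counts = list(pool.map(
            lambda k: node_trials(base_seed + k, per_node[k], **model),
            range(n_nodes)))
    return sum(counts) / total_trials


if __name__ == "__main__":
    print(error_probability(100_000, 4, threshold=1.0, signal=2.0,
                            noise_sigma=1.0))
```

For this toy model the exact error probability is Q(1) ≈ 0.159, which the estimate approaches as the number of trials grows. With fixed seeds and a fixed node count the result is also reproducible.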

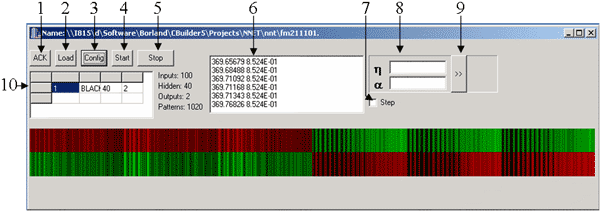

Supervising software that manages the operation of the distributed computing environment.

| No. | Interface element |
|---|---|
| 1 | Server initialization control |
| 2 | Project upload control |
| 3 | Transfer of the neural network configuration to the servers |
| 4 | Neural network training start |
| 5 | Neural network training stop |
| 6 | Training error output field |
| 7 | Step-by-step training switch |
| 8 | Neural network training parameter input fields |
| 9 | Button that sends the training parameter values to the server |
| 10 | List of available servers |
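The source does not describe how the supervising software communicates with the servers. The sketch below assumes a simple scheme in which each panel control that acts on the servers (items 1 to 5 and 9) sends one newline-terminated JSON command, with the step-by-step training switch (item 7) modeled as a flag on the start command. Each server runs a small state machine that rejects commands arriving out of order, for example a training start before the network configuration has been received. The command names, message format, and states are all hypothetical.

```python
import json
from enum import Enum
from typing import Any, Dict, Tuple


class Command(str, Enum):
    """Hypothetical commands, one per panel control that acts on servers."""
    INIT = "init"                      # item 1: server initialization
    UPLOAD_PROJECT = "upload_project"  # item 2: project upload
    SEND_CONFIG = "send_config"        # item 3: network configuration
    START = "start"                    # item 4 (item 7 is the "step" flag)
    STOP = "stop"                      # item 5: stop training
    SET_PARAMS = "set_params"          # item 9: training parameters


def encode(cmd: Command, **payload: Any) -> bytes:
    """Serialize a command as one newline-terminated JSON line."""
    return (json.dumps({"cmd": cmd.value, **payload}) + "\n").encode("utf-8")


def decode(line: bytes) -> Dict[str, Any]:
    """Parse one JSON line and turn the command name back into a Command."""
    msg = json.loads(line.decode("utf-8"))
    msg["cmd"] = Command(msg["cmd"])
    return msg


# Allowed (state, command) -> next state; anything else is rejected.
TRANSITIONS: Dict[Tuple[str, Command], str] = {
    ("idle", Command.INIT): "ready",
    ("ready", Command.UPLOAD_PROJECT): "project_loaded",
    ("project_loaded", Command.SEND_CONFIG): "configured",
    ("configured", Command.SET_PARAMS): "configured",
    ("configured", Command.START): "training",
    ("training", Command.STOP): "configured",
}


class TrainingServer:
    """Server-side state machine driven by the supervisor's commands."""

    def __init__(self) -> None:
        self.state = "idle"
        self.params: Dict[str, Any] = {}
        self.step_mode = False

    def handle(self, msg: Dict[str, Any]) -> str:
        cmd = msg["cmd"]
        next_state = TRANSITIONS.get((self.state, cmd))
        if next_state is None:
            return f"error: {cmd.value} not allowed in state {self.state}"
        if cmd is Command.SET_PARAMS:
            self.params.update(msg.get("params", {}))
        elif cmd is Command.START:
            self.step_mode = bool(msg.get("step", False))
        self.state = next_state
        return "ok"
```

A table-driven state machine keeps the allowed command order in one place, so adding a control to the panel means adding one command and its transitions.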

